I Returned To Programmer Hell To Read Over 3000 Pages To Prove NIH Has No Security

Wuhan Outbreak Investigation Part 2

Jump to here if you just want to see the end results and skip how I managed to pull it off.

Previously it turned out I couldn’t read Fauci FOIA emails correctly and made a mistake. Doing a root cause analysis I realised I was trying to read, essentially, too many pages (thousands!) at high speed so I was skim reading faster than normal and thus not taking in the information properly.

That is because, as noted before, the FOIA emails are primarily in image format and thus you cannot do a conventional text search on them. So the best way to solve my inability to read? Get a robot to do it. Grab your sick bags, it was time for Operation Read.

The PDF Crashed My Laptop

The PDF was over 360mb, and the Python library I was using that was supposed to convert PDFs to images started eating huge amounts of RAM until my laptop from ye olden times crashed.

I suspect the library developer did something painfully inefficient and kept loading separate copies of the PDF for every single image they were trying to convert. Remember kids, use pointer references.

I know they did because I watched it jump 379mb+ every second in memory until it hit RAM ceiling (a mere 8GB) and crashed the OS. Running out of memory is common for me in these Big Data projects. You’re supposed to use a dedicated server, not an antiquated laptop from before the wheel was invented, but The Daily Beagle isn’t financially viable yet (be sure to become a paying subscriber if you haven’t already).

As mentioned previously, I used paying subscribers’ money to upgrade the RAM on this laptop to reduce the number crashes and improve the quality of research I do for articles, to great success. So thank you! No sports cars or wine bottles here!

Use A Different Approach, Write Custom Software

I used a pre-built library installed on Ubuntu instead, to convert the PDF into separate PDF “pages”, so image conversion would no longer choke on the entire PDF.

Once images converted, I blew the dust off an OCR reader I built back in 2021, used previously to translate Chinese documents to investigate their infiltration into the US.

I removed old library references from the OCR reader or the tool wouldn’t start. It choked on the fat and large images of the pages. Again, you’re supposed to use a dedicated server. I built one that ran on a hamster wheel. No, I’m not going to use Google. F— Google.

Any image with a dimension greater than 2000 on either side would cause the program to chew up more CPU than a BitCoin mining operation run on Common Core Math - with Python thankfully auto-terminating it.

When I reviewed the culprit, I found the page had a lot of whitespace - dead area with no text in them, a visual depiction of Joe Biden’s brain.

I wrote code to auto-crop to just the area involving text and gave a 10 pixel buffer to make sure the OCR could still interpret. I also cropped to an even number on both dimensions - this would make scaling it down by half easier to do and less likely to distort the text.

Smug I had peak efficiency than my precedessor (myself), I run it looking forward to how fast it was going to run. Then code fell over. What?

NIH Stamp IDs Everywhere, And It Sucked

Investigating, I found the NIH FOIA team annoyingly stamped ID numbers at the bottom of the page (rather, than, say, at the top), which means even if there was just one word at the top, the text at the bottom guaranteed the entire — blank — page was loaded, causing the OCR to scream defeat.

So I measured the pixel offset of the annoying tag and got the code to auto-crop the bottom piece off so it wouldn’t keep forcing pages to be bigger than they needed to be during the auto-cropping. This would mean for pages with little text, the page size would be smaller than 2000px on either dimension.

Annnnd… it still fell over. Some pages were bloated with text. My past self had written an auto-resizing piece of code that was designed to cut the page size in half, but these page images were huge (over 5500 pixels height-wise), and cutting them in half (2750px) wasn’t enough to bring it below 2000.

So I introduced a resize loop. Whilst either dimenion was bigger than 2000, keep resizing it down by half. Yes, I’m aware the if-statement is unnecessary, it was left in if I needed to revert the code. Yes, the loop would affect quality and resolution, but the 2000 pixel cap was absolute, anything above guaranteed the OCR would stop working.

What Data Format To Store It As, Anyway?

With a single test complete where it didn’t fall over, I wondered what the data storage format would be. I settled on the ‘big no-no’ of individual text files for each page. I can sense the file admins screaming now: ‘do you know how hard that is going to hit disc reads?’. Eh, I’m used to slow loading. I cook meals during my sessions.

This might seem counter-intuitive from a Big Data standpoint first - normally you should use a nice, efficient, clean, searchable database - but there were a couple of advantages for text files.

First, it was easy for me to create an individual page if the tool did not load it correctly. Second, it was easy for me to find an individual page using a file search so I could jump directly to the ‘page’ and check the contents.

Plus, this was an interim format, which would allow me to check for which pages were missing and make them myself if necessary. Third, I didn’t need to make some complex database wrapper using SQLite that was guaranteed to add another two days dev time to get right minimum, so I avoided it to develop the code faster. Fourth, most users are not tech literate and wouldn’t know how to use a database anyway, and I wanted this to improve accessibility, not make it harder in a proprietary format.

I ran the code again and like Joe Biden on a bicycle, it fell over again.

The Classic ‘F**k You For No Reason’ Error

In programming, especially in very important projects, you will get the ‘f**k you for no reason’ error. You will waste your time trying to work out what went wrong only to understand… there’s no reason for the error to exist. It just does.

Out of 3234 pages, I got 64 individual ‘f**k you for no reason’ errors, where the OCR did not want to read a perfectly fine page ‘because reasons’. The error messages were unhelpful and the images appeared to be the right size and readable.

From what I could make out the OCR was trying to write some ‘out-of-bounds’ unicode character that didn’t exist in the text, and I have no idea why the OCR programmer would implement character recognition for a character they didn’t have in their OCR character index. So I did what all pro programmers do when they encounter such an error.

I ignored it.

Hey, Try This

I used a try-catch (try-except in Python) to bypass the few pages that did not want to play ball and made a note to manually produce the text (or use an online OCR tool) to convert the missing pages at a later stage.

I left the code to run in the background with a cooling pad so the overworked CPU didn’t melt whilst I read more Wuhan outbreak documents and tried to write the gain-of-function article.

It was not pleasant: letter entry lagged due to the CPU screaming under the weight of the OCR, and sometimes key inputs were ignored entirely. At one point Firefox itself froze where it entered a weird state where I had to switch windows to change tabs and nothing was interactable. It is the first time I’ve ever seen Firefox do this. Thankfully Substack auto-saves.

For some odd reason it had no issue running YouTube videos during this CPU intensive time. Weird. It was like something wanted me to procrasinate.

OCR Completes, More Work

I wrote more code to essentially test if files were ‘shallow’ (contained little/no info) or if they were missing. I found 64 missing (due to errors) and less than 8 were shallow (containing blank lines of nothing).

It is no good if I go ‘ta-da’ and someone points out a bunch of pages are missing and then starts wondering if I’m some sort of fraudulent hack who can’t read email pages properly. Can’t have readers thinking I’m illiterate or stupid or they’ll stop paying me money (although I dunno, politicians seem successful?)

I manually dealt with the 8 shallow files first. I cross referenced the shallow text with the original images and manually either wrote data in square brackets (for images with no text) or wrote what was on the page. Only one was ‘intentionally left blank’.

The 64 I realised were time consuming, as they were fully fledged pages with large chunks of data on them. And not just pages of chunky blocks of redacted material.

So I wrote a script where the program would open a new instance of the text file, open a copy of the original image, and wait until I pressed enter in the command console to show me the next set. I am become human OCR, destroyer of images!

That way I didn’t have to waste time manually opening each file. It was a lot quicker, and using an online OCR tool to read the lengthy segments and manually transcribing the shorter segments - along with square bracket comments for images - I managed to get the fixes done in about 2-3 hours. Work smarter, not harder.

Look For Obvious Typographical Errors

So I wrote code to finally merge the text files into a compiled single text file copy with page numbering. Except when I ran it, it didn’t number the ‘pages’. So I fixed it and ran it again.

Then I noticed the OCR liked puking up an awful lot of new lines. These are the gaps between sentences, like the one above. It had between 5 to 10 new lines between individual letters for reasons I could not comprehend. I did not want to ‘squish’ the text together because the OCR is WYSIWYG [pronounced ‘Wizzywig’] (What You See Is What You Get), so I wrote it to suppress anything that exceeded three new lines.

I started to skimread for OCR reading errors. There’s quite a few. Too many for me to correct and this isn’t supposed to be a word perfect version because proofreading 3234 pages would take me until my old age to do. I corrected some obvious mis-reads but I didn’t want to get too aggressive on corrections, in-case I started correcting original mistakes by the FOIA’d authors.

Ideally what you should be doing is using it to search for text, and then when you find it, you scroll down that text section until you see the page number, then you go look up the page number in the original FOIA PDF.

I can now tell you what I’ve found using the OCR tool. And it is a stunner.

The Results Are In

I found that…

NIH Were Aware Of “Enhanced Disease” Issues Back In March 2020

Found on page 18, this is information the NIH publicly suppressed as ‘false’.

Steve Black of SPEAC (‘Safety Platform for Emergency vACcines’) project run by CEPI (Coalition for Epidemic Preparedness Innovations), a Norwegian Association who ‘accelerate the development of vaccines’ (read: cut corners on safety testing to bring a dodgy product to you, faster) comments that given previous SARS vaccines caused “Enhanced Disease” that there is a risk SARS-CoV-2 shots will as well:

Odd the NIH failed to mention this concern when suppressing reports of VAED (‘Vaccine Associated Enhanced Disease’) and VAERD (‘Vaccine Associated Enhanced Respiratory Disease’).

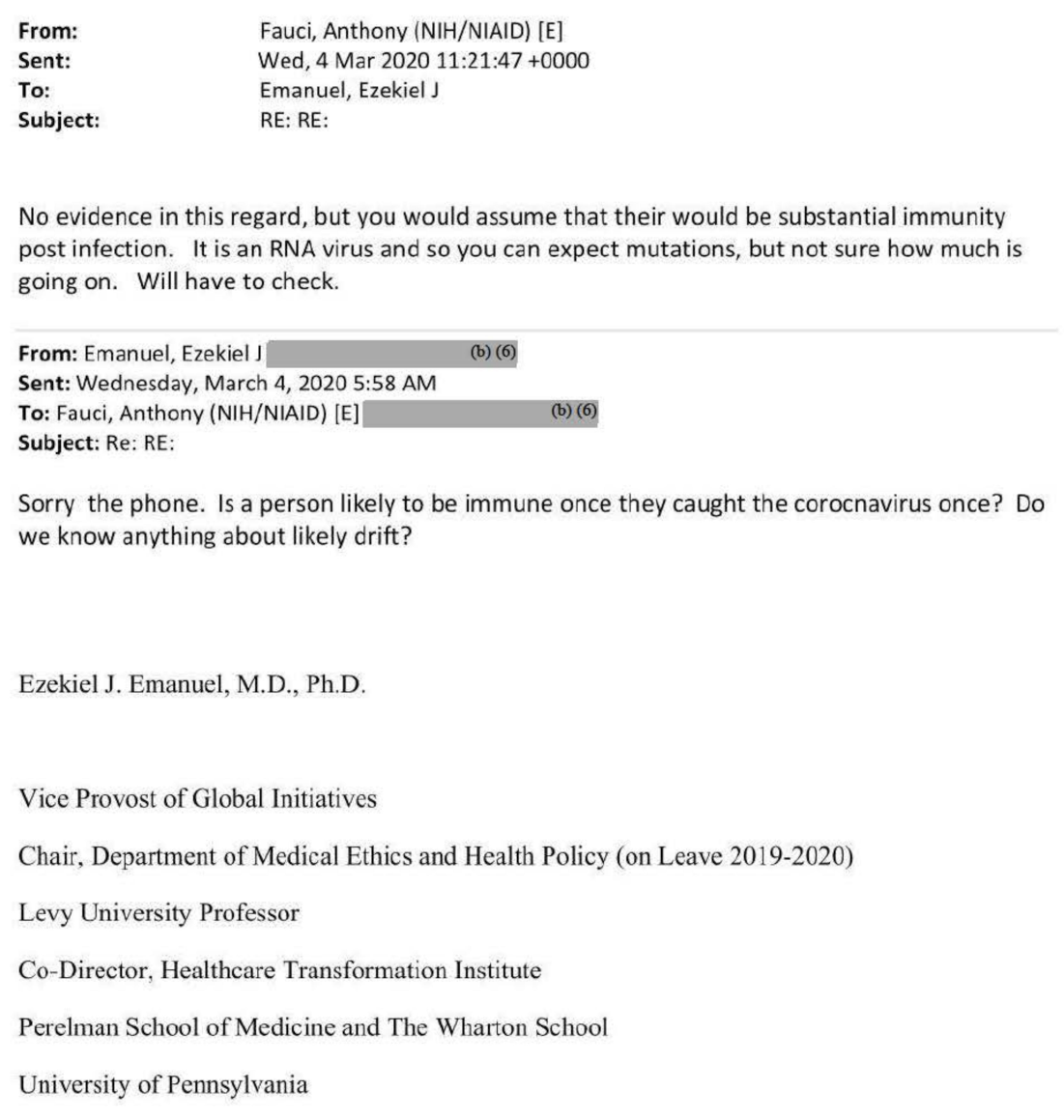

Fauci Admits Natural Immunity To SARS-CoV-2 Is Likely

Found on page 22, Fauci is asked a question by Ezekiel about immunity. Fauci replies “but you would assume that their[sic] would be substantial immunity post infection”. Yes, yes you would. Odd the CDC, NIH and others don’t acknowledge that it exists, then.

The NIH Were Already Discussing Boosters Back In March 2020 Long Before Anything Was In Development

Rob Klein, a pharmacist, obviously shilling for pharmaceutical products, proposes using a “booster of an existing vaccine”. Before any SARS-CoV-2 shot was even beyond planning stage.

Fauci’s Dad, Stephen Fauci, Was A Pharmacist Who Ran The “Fauci Pharmacy”

Shilling for pharmaceutical products runs in the family. On pages 996 to 1002 is a nauseating blurb gushing over the family history of Fauci. Oddly, Fauci never publicly disclosed this giant conflict of interest, I wonder why?

Jesuits Wanted To Interview Fauci Over His Jesuit Education

Below is from page 45, but you can also see references on pages 998, 1000, 1129, 1325 and 2346.

NIH Failed At Redactions

There were so many failures in FOIA (b)(6) redactions - too many for a list of pages - that it is laughable the NIH thinks it can even keep us safe from any disease spread.

Notable failures included three of Fauci’s government email addresses were exposed, including:

anthony.fauci@nih.gov on page 951

afauci@niaid.nih.gov on page 1102

Anthony.s.fauci@nih.gov on page 1123

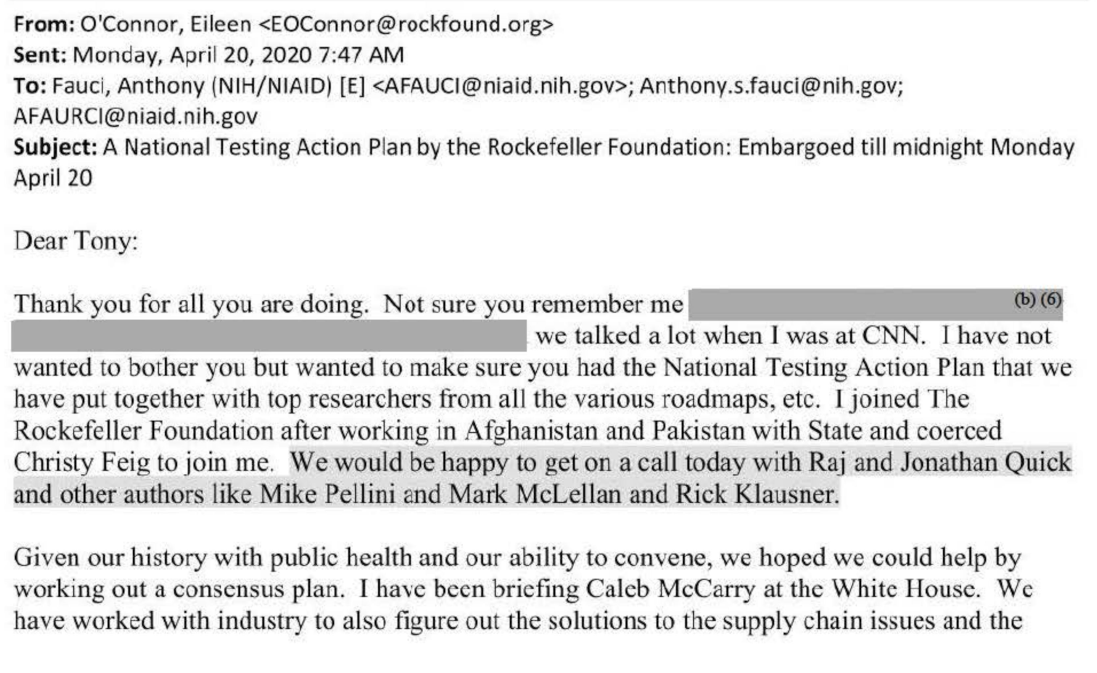

The last one is particularly damning because it shows Fauci talking with the Rockefeller Foundation (expanded below).

The NIH Fails To Properly Redact Names

On page 1123, notice not only are the emails expected, but the second grey highlighted text discloses names. It also interesting notes a comment by Eileen O’Connor who remarks she “coerced Christy Feig to join me”. How did you coerce them Eileen?

Jonathan Quick previously was President and Chief Executive Officer of Management Sciences for Health from 2004-2017, has served as director of essential medicines at the World Health Organization, advisor for health system development and financing programs in Afghanistan and Kenya, and clinical director and chief of staff at the U.S. Public Health Service Indian Hospital in Talihina, Oklahoma (publicly stated here). People will recall Indian Health services were embroiled in cover-ups and fraud previously.

Mike Pellini was hired to the board of directors of Adaptive Biotechnologies, is ‘managing partner’ of ‘Section 32’, was CEO of Foundation Medicine.

"Mark McLellan" is likely a typo by Eileen, as the Rockefeller foundation has a Mark McClellan on their website:

Mark McClellan served as commissioner of the United States Food and Drug Administration under George Bush 2002 to 2004, as admin of the Centers for Medicare and Medicaid Services 2004 to 2006. He’s currently director of the Robert J Margolis Center for Health Policy and the Margolis Professor of Business, Medicine and Health Policy at Duke University and works for the Rockefeller Foundation

Rick Klausner is chairman of Lyell Immunopharma, Inc, a company that describes itself as a “T cell reprogramming company dedicated to the mastery of T cells to cure patients with solid tumors”.

He is also on the board of Sonoma Biotherapeutics, previously worked for the US National Cancer Institute up until 2001, and is former executive director of the Bill & Melinda Gates Foundation. Who says the NIH isn’t in-bed with the industry?

Cozy Ties Exposed

On page 1124, the NIH also fails to redact the remarks by Eileen to give Fauci a direct call, suggesting they already have each other’s phone numbers and Fauci is under undue outside industry influence and is not impartial:

This is just the tip of the iceberg, however. The public may be interested in the list of exposed emails in the FOIA. It has only been partially checked for errors, some may contain typos from the OCR, please refer to the original page number which is supplied by the OCR tool. Not all emails may have been extracted as it was Regex dependent.

I also encourage the public to do their own searches of the now searchable OCR text documents, as with 3000+ pages I will have likely missed other juicy aspects.

You can download the text document for the Fauci FOIA emails here. You can cross reference it with the original Fauci FOIA emails PDF hosted by the Internet Archive here. If you encounter any errors with the datasets please let me know.

Vote on the next article you’d most like to see:

Help make The Daily Beagle financially viable, where we expose corruption and use the resources to improve our reporting.

Learned something new? Think others need to be made aware? Share so others may learn!

Got your own views on the situation? Got an article idea? Spotted a mistake? Feel free to leave a comment below:

Fauci using the wrong “there” doesn’t instill any confidence in him.

Fauci will have made such evidence of his crimes against humanity even more inaccessible by using Sanscrit or Mayan language, to avoid legal action and inevitably facing prison (or hopefully a Death sentence) for crimes against humanity.

World Health Organisation = 'Bought and Paid For' by Bill Gates who states, "Vaccines are the most lucrative investment I ever made". Gates is the largest Invester (Influencer) in the now corrupt, WHO. Gates also believes the planet is vastly overpopulated. JOIN THE DOTS!

I remember reading the 'CoronaVirus' was patented as a 'Living Organism' many years ago, and the patentees were unknown individuals (Possibly 'stooges' for big pharma or Gates or Fauci or possibly the whole cabal?). Historically, it was not an ethically approved practice to try and Patent a Living Organism, but money talks so disciplines can be lowered, manipulated or financially compromised. The only reason such a Patent was invested in was financial = Big Pharma and other saw the profitability in modifying a virus (Gain of Function) in order to pretend they have a cure. Especially when they'd already bought a 'concession' to TEMPORARILLY avoid LIABILITY (Swine Flu = 1976) for which they had developed an equally devastatingly useless, deadly vaccine. LIABILITY must be reintroduced IMMEDIATELY in order to stop Big Pharma assuming they have an incontestable 'LICENCE to KILL'.

Also; 'F**k PayPal' for trying to fine their customers $2500 for questioning the DEADLY but useless Depopulation Program called Covid Vax! Mick from Hooe (UK) Unjabbed and ready to fight.