Disclaimer: Prior to writing this article, I got permission from OpenAI to use DALL-E 2 images, so long as I kept the watermark on the images and made clear they were AI-generated.

You have probably all seen the impressive image generation capabilities of the likes of Midjourney and DALL-E 2. They’re both AI image generators that take words and produce some rather decent images.

Midjourney seems much pricier and is overly complicated - it forces you to type your image generation prompts in public, in an almost collectivist-style chat room on Discord, which their policy terms pretty much admit outright:

That is, unless you happen to be rich ($600 a year, or $50 a month!), in which case you get the “privilege” of having your privacy reinstated by being able to DM the Midjourney bot for image generation, instead of using the common area with the plebs.

The Midjourney images are more ornate, more akin to artwork, but they’re also less practical, especially with the collectivist work ethic of ‘only the rich get their privacy’. Their quality of generation is on par with DALL-E 2.

DALL-E 2 is cheaper. And private. Midjourney only offers 10 free generations, once; DALL-E 2 offers 15 free generations per month. You can buy an extra 100 generations for, supposedly, “$15” from OpenAI, but when I tried it, they ripped me off and charged me £15.07 (the pound is worth more than the dollar: $15 is roughly £12.43).
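To put a number on the gap, here is a quick back-of-the-envelope sketch using only the figures quoted above. The exchange rate is the one implied by “$15 is roughly £12.43”, which is an assumption and not a live rate, and the variable names are purely illustrative.

```python
# Figures quoted in the article. The exchange rate is only implied by the
# "$15 is roughly £12.43" conversion; it is an assumption, not a live rate.
ADVERTISED_USD = 15.00
EXPECTED_GBP = 12.43
CHARGED_GBP = 15.07

gbp_per_usd = EXPECTED_GBP / ADVERTISED_USD          # implied pounds per dollar
overcharge_gbp = CHARGED_GBP - EXPECTED_GBP          # extra pounds paid
overcharge_pct = overcharge_gbp / EXPECTED_GBP * 100
charged_usd = CHARGED_GBP / gbp_per_usd              # the charge, back in dollars

print(f"Implied rate: £{gbp_per_usd:.4f} per $1")
print(f"Overcharge: £{overcharge_gbp:.2f} ({overcharge_pct:.1f}%)")
print(f"Amount charged in dollars: ${charged_usd:.2f}")
```

On those figures the charge comes out about £2.64 over the expected price, roughly 21% more than advertised.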

One of the major downsides to DALL-E 2 is that you have to ask OpenAI to review any context in which you want to use the images. You’re a paying customer, they have your financial details, but you have to grovel and beg before you can use the images in any sort of normal sense. Imagine paying for a car but having to beg the manufacturer to let you drive to the fast food outlet. Pretty please, I won’t eat too much food.

Contrast Pixabay.com. This isn’t a plug by us, nor is it an AI image generation site, but it hosts many images that can be used for free, and you’re not required to ask politely whether you can use an image for a specific use case. Permission is granted automatically. This is the space DALL-E 2 is trying to compete in.

Pixabay generally provides a ‘good enough’ set of options in most cases, but there’s rarely any novelty or customisation in stock image photos. Maybe you’re arguing DALL-E 2 should be used for custom renderings, such as a pigeon driving a car…

The images it can generate are impressive - it can generate scarily accurate images of people’s faces superimposed on different environments, but the novelty quickly wears off. Is there much of a market for novelty images? OpenAI don’t seem keen on their images being used for “memes” or being used to spread “misinformation”, as defined by the Ministry of Truth.

Paying customers typically want image generators for practical purposes: mockups of designs, representations of ideas, informative or funny visuals, magazine covers or business logos.

I opted to give it a spin generating the embed images for articles on this Substack - the image you see as a sort of ‘representative’ of the article before you click on it - to try to boost the quality of the article images.

I’m Sorry Dave, I Can’t Let You Do That

The most annoying thing about DALL-E 2 - and why you shouldn’t waste your money on it - is the fact it will perpetually threaten to suspend you if you engage in wrongthink and write the “wrong” terms.

I can understand filtration of terms, but being constantly threatened with automatic suspension by some machine, after I’ve paid cash, based on some unclear number of actions, as if I’m some troll to be policed like a child, is grossly disrespectful. Offensive, even.

You’d be forgiven for thinking it was the ‘usual’ terms that get censored, but no. You’ll be constantly peppered with messages akin to this…

Possible automatic suspension? You want to know what term caused that?

“Joe Biden versus Vladimir Putin as a newspaper cartoon”

Yes, that’s right, the name Vladimir Putin, a world leader, a man relevant to news reporting and history, is a prohibited, disallowed term that violates their content guidelines. Woe betide you if you try to accurately depict a tussle between two world superpowers for a news article.

You know what isn’t disallowed? The name of an al-Qaeda leader:

“Caricature of Ayman al-Zawahiri done as a "Guess Who" boardgame character for a news article”

So, OpenAI clearly have some sort of political bias which they’re extending to their content filtration rules. Vladimir Putin is a no, but Ayman al-Zawahiri is a yes. They’re not okay with Russians invading, but they are okay with the leader of a terrorist group that oppresses women and beheads people. The lack of objectivity or thoroughness in implementation is alarming, and utterly frustrating to navigate.

The content guidelines are vague and whimsical. They tell you not to depict ‘real world persons’ (or ‘public figures’, depending on whatever fluid Terms-of-Service change they make) - hence the usage of the word ‘cartoon’ or ‘caricature’ in any of The Daily Beagle’s generations. But there’s something borderline moronic about the filter.

“World War 3” will get you flagged for a content violation, even though it is an incredibly common term in public speech and, at time of writing, complete speculative fiction. “WW3”, however, does not get flagged.

War is bad, weapons and destruction are evil, even in fiction, but hey, I can ask for a blueprint for a space-based laser cannon:

I’m sure OpenAI will peddle some bulls**t about trying to protect the AI against abuses by ‘misinformation’ or ‘disinformation’ but the door for rewording, using foreign languages, using typographical errors, loopholes and misspellings is a wide open one, and their restrictions are arbitrary, based on whatever the small clique at OpenAI doesn’t like right now. Today Putin, tomorrow a US President. Maybe Mike Pence.
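To illustrate why this sort of filtering is so leaky, here is a minimal sketch of a naive phrase blocklist. To be clear, this is a hypothetical stand-in: I have no idea how OpenAI’s filter is actually implemented, and the `BLOCKED_PHRASES` set and `is_flagged` function are names made up for this example, seeded with the terms from this article.

```python
# Hypothetical blocklist built from the examples in this article.
# This is NOT OpenAI's actual filter, just a sketch of the general idea.
BLOCKED_PHRASES = {"world war 3", "vladimir putin"}


def is_flagged(prompt: str) -> bool:
    """Flag a prompt if it contains any blocked phrase, ignoring case."""
    lowered = prompt.lower()
    return any(phrase in lowered for phrase in BLOCKED_PHRASES)


prompts = [
    "Joe Biden versus Vladimir Putin as a newspaper cartoon",
    "World War 3 as a newspaper cartoon",
    "WW3 as a newspaper cartoon",        # abbreviation slips through
    "Wolrd War 3 as a newspaper cartoon",  # typo slips through
]
for prompt in prompts:
    print(f"{is_flagged(prompt)!s:5}  {prompt}")
```

Any filter that matches exact phrases behaves like this: the abbreviation and the typo both sail past, while the honest spelling gets punished.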

No Comment From OpenAI

When I highlighted the flaws to them, OpenAI gave a canned response and then nothing: no rebuttal, no feedback, no reply beyond a glib note informing me that I couldn’t use public figures. Yeah, no, that’s like suggesting you stop crime by telling crooks not to steal.

Our Content Policy states 'Do not attempt to create images of public figures (including celebrities).', which encapsulates the use case you are suggesting.

Please let me know if you have any further questions - I'll be happy to help!

I naively took their offer of answering further questions at face value.

I asked their representative a series of questions about hypothetical scenarios. Are cartoon characters like ‘Bugs Bunny’ public figures? If no, is a cartoon of Joe Biden a public figure? If yes, does that mean I can create cartoon characters OpenAI would have to ban on their service? Would “Boe Jiden” count? Do fictional characters like Captain Kirk, played by real world people like William Shatner, count as ‘public figures’? What about parody knock-offs, do they count as public figures?

My questions could be called pedantic hairsplitting, but in the world of technical design that is exactly what other customers of a service would be thinking. How do I know where the line for ‘public figure’ is, if you don’t know either? If cartoon Joe Biden is a public figure with real feelings, then I have to infer the absurdity that so is animated series Captain Kirk.

Suddenly, I got a cold, stony silence from “Jessica” at OpenAI. No response after two weeks of waiting, just an automated system asking me for feedback.

They were happy to take my money, and eagerly approved a review in a separate email - I imagine they were expecting this to be a positive one to give them more positive press, but alas, no, the entire experience was being judged, and found lacking.

And during this exploration of how their system works, I found a horrible, easily exploited loophole in their code. The watermarks are meaningless!

The Watermark Can Be Disabled

The watermark on the images was introduced as a way to try to stop people from passing off AI generated images as real. Okay, fair enough, but when you put the security feature in an easily editable bottom-right corner (easily defeated by an image crop)… and then make it so someone’s web browser can easily disable it via, I dunno, an ad blocking tool, it rather defeats the point.

It’s almost like they want people to bypass the security features easily, but at the same time threaten to suspend them at every opportunity should they write the ‘wrong’ words, using a vague, inscrutable system. A weird message you’re sending out there, OpenAI.

I did raise this with them, before anyone accuses me of irresponsible disclosure, and they agreed with my assessment that the watermark should be rendered server-side. They still haven’t fixed the vulnerability.
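For anyone wondering what “rendered server-side” means in practice, here is a rough sketch. The idea is that the server writes the watermark into the pixel data before the image ever leaves it, so there is nothing separate for the browser to hide. Everything here is an assumption for illustration: images are modelled as plain grids of RGB tuples to avoid needing an imaging library, and `bake_watermark` is a made-up name, not anything from OpenAI’s code.

```python
# Hypothetical sketch: images are plain grids of RGB tuples, so the example
# needs no third-party imaging library. Not OpenAI's implementation.
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def bake_watermark(image: Image, mark: Image) -> Image:
    """Return a copy of `image` with `mark` written into its bottom-right corner."""
    height, width = len(image), len(image[0])
    mark_h, mark_w = len(mark), len(mark[0])
    if mark_h > height or mark_w > width:
        raise ValueError("watermark is larger than the image")
    stamped = [row[:] for row in image]  # copy so the original stays untouched
    for y in range(mark_h):
        for x in range(mark_w):
            stamped[height - mark_h + y][width - mark_w + x] = mark[y][x]
    return stamped


# A 4x4 white image and a 1x3 colour strip standing in for the watermark.
white = (255, 255, 255)
image = [[white] * 4 for _ in range(4)]
mark = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]

served = bake_watermark(image, mark)
print(served[3])  # the bottom row the server would actually send
```

This only closes the browser-side hole. A corner watermark baked into the pixels can still be cropped out, so it is a partial fix at best.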

The Vulnerability That They Won’t Fix

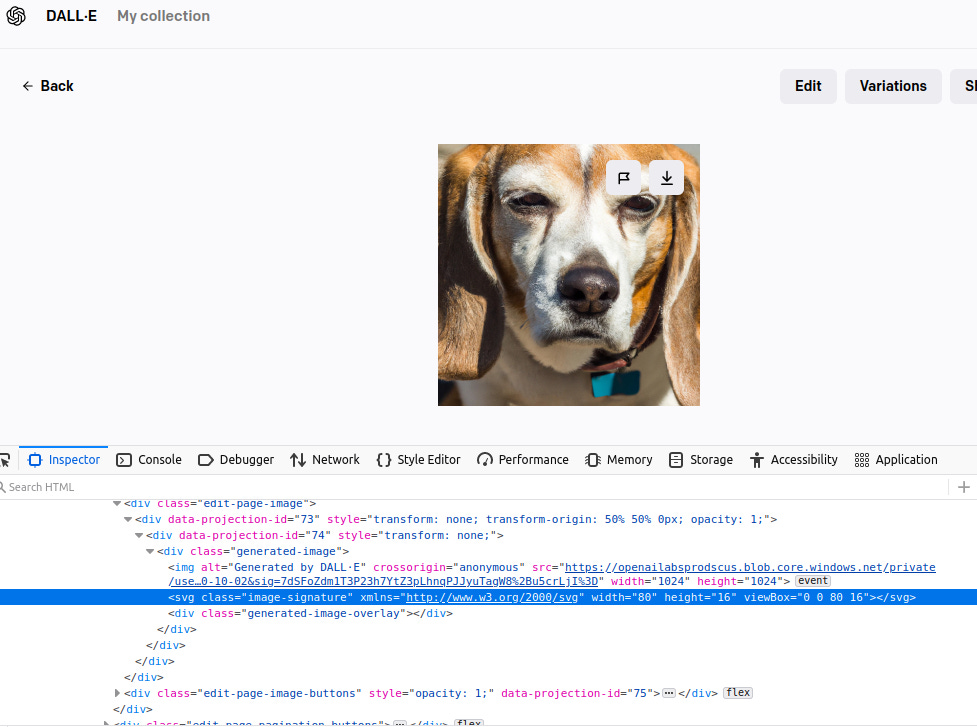

All you do is go to an image, right-click it, and click “Inspect” (note the watermark in the bottom right-hand corner of the beagle image)…

And you’ll notice the “watermark” is just a series of deletable SVG “path” nodes which you can remove literally by clicking on them, and then pressing the delete key on your keyboard, so this happens:

Notice the absence of the watermark in the beagle image.

Now, if you re-download the image using the normal download button, they will re-apply the watermark, but that’s only if you re-download the image using that method.

Another Vulnerability, Too

There’s an even easier way that bypasses the SVG altogether - remember, these are the guys who are supposed to be protecting us from rogue killer AI and they can’t even keep their own site secure - if you just double-click the ‘img’ element (shown below):

You’ll see it has a source link for a giant blob of a URL. If you just copy (Ctrl + C) that URL and paste (Ctrl + V) it into another tab’s address bar, you should find the full image, without the watermark, like so:

Yeah, great security feature that. We’re ignoring the other possibility of just taking a screenshot of the version with the watermark disabled as well.

The lack of watermark security isn’t the reason why you should avoid DALL-E 2, however, as that's more of a ‘them’ problem, although it doesn’t bode well. Essentially there’s no practical use for DALL-E 2 currently.

Either the practical uses involving real world events offend OpenAI into stunned silence - it isn’t possible to hold a meaningful adult discussion on serious real world issues - or what remains is the kind of trite, shallow, non-practical fare like cute animals and stuffed bears, which gets old.

DALL-E 2 does have another major failing, however…

Language

DALL-E 2 cannot write meaningful text to save its life. This is despite the fact that OpenAI’s text-generating AI, GPT-3, can form coherent sentences. DALL-E 2 appears to just mangle multiple languages’ words together to form gibberish:

Midjourney is much the same, although they don’t permit public re-use in a commercial setting without paying their high monthly fees, hence the lack of Midjourney examples here.

With the limits on what topics you can generate with DALL-E 2, coupled with constant threats of suspension (as a paying customer!), the incoherent text, and the frustrating tendency to have to reword things (each attempt costing a generation, and thus money), DALL-E 2 is not worth your time or money from a business sense. Quirky, but not practical.

Hopefully The Daily Beagle will have saved you some money and given you some insights. If you appreciate the research and work we do, be sure to become a paying subscriber!
