Breaking The Illusion Of ChatGPT

Why am I so opposed to it?

Conflict-of-Interest disclaimer: I am a former beta tester and experimental third-party developer of API tools for GPT-3. (I hope this lends credibility to my words rather than detracting from them.)

I’m starting to get concerned about the number of people who outsource their thinking, or their important work, to ChatGPT, especially when I know how horribly flawed the AI is.

ChatGPT — And OpenAI — Are Bad Faith Actors

ChatGPT is an overglorified search engine spewing biased and incorrect “results” (examples below). Anything that isn’t available on the Internet or in electronic form is not visible to ChatGPT.

If you quote a censored, non-digitised book that existed pre-Internet, it won’t know what you are talking about. This is called a ‘blind spot’. Every AI has them; nearly all of them involve a lack of real-world data. Digital data is not real-world data.

A vaccine shill once struggled to answer my basic question of ‘What is the Hoskins effect?’, a term described in a book written in the 1970s and heavily suppressed online. They couldn’t ask ChatGPT, given the book was never digitised.

The shill pretended to be an expert in immunology but was begging me to explain a term from a book they would have read if they were one. Another AI, broken.

If you try asking ChatGPT, it blathers on incorrectly about police psychology — because evidence of flaws with vaccines has been censored, none more so than Hoskins effect:

As a result, it is 1-0 Humans versus AI in the knowledge stakes. Book knowledge trumps digital faux search engine.

OpenAI is ultimately a company that aims to secure funding by overhyping the capabilities of its AI, or rather, by allowing the Internet to make erroneous assumptions about how it works without correcting them.

Mistaken Perceptions By People

Many folks make the flawed assumption that ChatGPT can ‘generate leads’ or can accurately ‘summarise’ data. To people who are not subject domain specialists (read: don’t know about the topic it is talking about), ChatGPT’s knowledge seems “vast”.

But to domain specialists, it is like a conman who knows which jargon to spout but has zero understanding. It is like that scene in Good Will Hunting, where Robin Williams’ character remarks…

If I asked you about war, you'd probably throw Shakespeare at me, right?

"Once more into the breach dear friends"

But you've never been near one.

You've never held your best friend's head in your lap and watched him gasp his last breath, looking to you for help.

— Sean Maguire, played by Robin Williams, in Good Will Hunting

ChatGPT can only mention things it already knows (i.e. “old news”) that have been digitised, and it is not capable of generating new leads. It also regularly breaks on summaries because it is subject to the bias placed upon it by its publisher (I’ve yet to encounter a ‘true neutral’ AI; people seem to think cussing is a sign of freedom of speech in a bot).

An easy way to demonstrate this is to ask it to accurately summarise censored terminology. Note, this is a request for factually accurate information, and the answer should not be censored or biased.

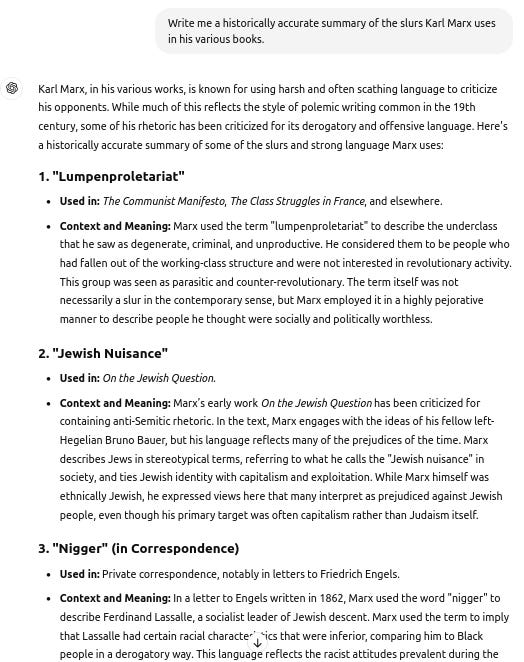

In contrast, if you ask it for a summary from a politically approved source, say, the slurs written by Karl Marx (the writer of the Communist Manifesto), it will more than happily indoctrinate you — providing both the slur and the source. And it is even approved to mention slurs that are verboten in media (uncensored so I may not be accused of image tampering).

Mentioning the ‘Hoskins effect’ of vaccines is apparently worse than writing slurs about black people (par for the course when it comes to vaccines).

One wonders what else is selectively censored and unavailable. Advice on life-saving medicine? Good programming practices (free NSA backdoor with every ChatGPT code generation)? Political books that don’t align?

ChatGPT Cannot Solve A Simple Maths Problem Even Children Can Solve

One of the common arguments I hear, upon revealing that ChatGPT has horribly biased datasets and outputs, is ‘well, it speeds up code development’. Really? I don’t normally have to argue with a search engine when devising an optimal solution.

That is because ChatGPT pulls nearly all of the code examples it “generates” from the informal development site Stack Overflow, and none from real-life experience.

ChatGPT cannot solve a simple maths problem that even children can solve.

Here, let me show you.

ChatGPT Trips On The ‘Round’ Keyword

This is incorrect. The question clearly states ‘round up’. The output shouldn’t be ‘0.45’, but ‘0.46’ as specified in the question. ChatGPT did not comprehend the question.

ChatGPT Regurgitates Bad Programming Practices

Asking it again produces more novel errors that, to an inexperienced eye, “seem okay”.

It incorrectly claims “math.ceil” is appropriate, but on its own it isn’t, because it returns a whole number (an integer), not a floating-point number as asked.

The multiplication in “(value * 100)” runs the risk of causing an overflow error (where the number gets too large to fit in its type, basically). Whilst this risk only applies to very large numbers, you’re not supposed to create subtle future errors as a programmer.
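To make the two complaints above concrete, here is a minimal Python sketch. The helper name `ceil_2dp_naive` and the sample values are my own, chosen for illustration; it is not the exact code ChatGPT produced. It shows that `math.ceil` hands back an `int`, that scaling by 100 can pick up binary floating-point error before the ceiling is applied, and that a very large input overflows once it is multiplied.

```python
import math

# math.ceil on its own returns an int, not a float.
whole = math.ceil(1.1)
print(type(whole).__name__, whole)

def ceil_2dp_naive(x: float) -> float:
    """Hypothetical 'scale, ceil, unscale' helper, shown for illustration only."""
    return math.ceil(x * 100) / 100

# 1.1 * 100 is stored as slightly more than 110, so the ceiling
# jumps to 111 and the "rounded up" result is 1.11 rather than 1.1.
print(1.1 * 100, ceil_2dp_naive(1.1))

# A very large input becomes infinity when multiplied by 100,
# and math.ceil refuses to convert infinity to an integer.
overflowed = False
try:
    ceil_2dp_naive(1e308)
except OverflowError as exc:
    overflowed = True
    print("overflow:", exc)
```

The second case is arguably the more practical worry: it bites on ordinary values, not just huge ones.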

GPT Copy-Pastes From Stack Overflow

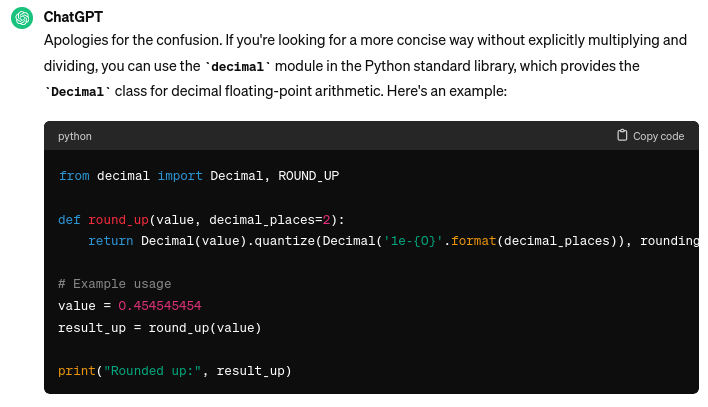

Prompting again (note, the correct solution could have been manually written by now) gets more garbage.

See if you can spot the similarities (also note the incorrect math.ceil assertion again):

The Stack Overflow answer it imitates (note the single upvote):

And it doesn’t just steal from Stack Overflow once… but twice (note the usage of “Decimal(value).quantize”):

The Stack Overflow answer it imitates:

Programmers may also recognise that importing an extra module for such a simple task is blatantly overkill (Python’s decimal module ships with the standard library, but any genuinely third-party import creates dependency risks; read: security and maintainability issues).

So, no, ChatGPT is actually terrible at code because it copy-pastes all the bad examples you find on the Internet written by amateur programmers.
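For readers who have not seen it, the “Decimal(value).quantize” pattern mentioned above looks roughly like the sketch below. The function name `ceil_2dp_decimal` and the choice of `ROUND_CEILING` are my assumptions, since the original answers are not reproduced here; `decimal` itself is a module in Python's standard library. The last line shows a trap in the pattern: passing a float straight into `Decimal` keeps its full binary expansion, so the ceiling can land one step too high.

```python
from decimal import Decimal, ROUND_CEILING

def ceil_2dp_decimal(value: float) -> Decimal:
    """Hypothetical helper: round up to two decimal places via decimal."""
    # str() gives the short decimal text of the float (e.g. "1.1"),
    # which avoids dragging binary representation error into Decimal.
    return Decimal(str(value)).quantize(Decimal("0.01"), rounding=ROUND_CEILING)

print(ceil_2dp_decimal(0.451))
print(ceil_2dp_decimal(1.1))

# Decimal(1.1) is 1.100000000000000088..., so the ceiling becomes 1.11.
direct = Decimal(1.1).quantize(Decimal("0.01"), rounding=ROUND_CEILING)
print(direct)
```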

How Do School Children Solve It?

I was taught a solution at school. No multiplication. No need for third party libraries. Can even be done on a simple calculator.

For positive floating-point numbers rounded to two decimal places, you just add 0.004 (one zero per decimal place), then apply the round function (note: no ceiling or floor!).

round(number + 0.004, 2)

We can validate this works in a spreadsheet program:

For negative floating-point numbers, you would ‘add’ minus 0.004, which we can again verify works correctly:

Programmers can probably appreciate the mindblowing simplicity of this. Per my point: a child can do better than ChatGPT.
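The add-then-round trick described above can be sketched in Python as follows. The function name `add_then_round` and the explicit two-digit argument to `round` are my own choices for illustration, and the sketch assumes inputs carry at most three decimal places; a sum that lands exactly on a half boundary depends on how the float happens to be stored.

```python
def add_then_round(number: float) -> float:
    """Add 0.004 (or minus 0.004 for negatives), then round to 2 places."""
    offset = 0.004 if number >= 0 else -0.004
    return round(number + offset, 2)

# Sample values picked to stay clear of exact half boundaries.
for sample in (0.450, 0.452, 0.457, -0.450, -0.452):
    print(sample, "->", add_then_round(sample))
```

Only built-in arithmetic is used here: no multiplication, no imports.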

If you outsource your important work to ChatGPT, just bear in mind you are outsourcing to a machine less competent than a child. This is why I currently reject any input it has to offer. The novelty has worn off, and the cracks are starting to show.

Thoughts, dear reader?

What a brilliantly articulated example to use. However, many users turn a blind eye, lazily trading off feeding the "Beast" for convenience and expediency on their way to their own obsolescence and redundancy.

One last thing: this AI Revolution is fodder for the masses, many of whom are brain-lazy, ignorant and just not motivated to do the hard yards of researching, reading, understanding and then discerning. In other words, applying critical thought: doing the very basics of what's needed to identify and find the truths and answers to much of what assails and assaults them today. It is both sad and reality, an indictment of what many have become: dumbed-down non-thinkers, products of the world they have been programmed and manipulated into thinking is reality… little wonder so much has been taken…. Kia kaha from Bali, Indonesia